Formally adopted by the Council of the EU in May 2024, the AI Act[1] aims to enhance the competitiveness of the Single Market while ensuring the protection of health, safety and fundamental rights. This comprehensive regulation affects a wide range of stakeholders, including manufacturers, deployers[2] and providers of AI models and systems, who must inventory their AI systems and comply with its legal requirements. Beyond these direct implications, the AI Act also has a broader societal impact. This article explores its scope and governance structure and pinpoints opportunities for employees and their representatives to engage proactively in the implementation of this landmark piece of legislation.

-

The scope of the AI Act

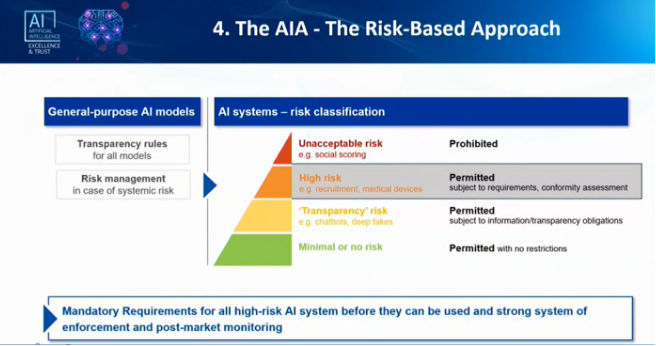

The primary public policy objective of the AI Act is to foster the development, use and uptake of AI in the internal market while ensuring a high level of health and safety and the protection of fundamental rights (Recital 8, Article 1). To achieve this, the legislator opted for a risk-based approach underpinned by the New Legislative Framework. This system relies on (technical) harmonized standards that translate the general legal requirements into practical terms (Recitals 9, 47). Responsibility for the quality of the product is thereby placed on the various actors along the product's lifecycle. The harmonized standards are expected to be published in spring 2025.

The AI Act primarily applies to providers and deployers in both the public and private sectors, and it has an extraterritorial component that applies to providers outside the EU. Central to the regulation is the risk-based governance approach for AI systems, including General Purpose AI systems (GPAIs). The AI Act categorises risks into five levels: unacceptable, high, limited and minimal risk, plus systemic risk for GPAI models. These cover not only traditional health and safety risks but also risks to fundamental rights.

Annex III of the AI Act lists areas considered high-risk. The Commission is empowered to adopt delegated acts to amend Annex III by adding or modifying use-cases of high-risk AI systems (Article 7).

The governance structure is innovative and establishes several new bodies: the AI Office, the AI Board, the Advisory Forum and the scientific panel. The AI Office supports the implementation of the AI Act, enforces the rules on general-purpose AI, develops tools, methodologies and benchmarks to evaluate the capabilities of GPAI models, and decides whether these models present a systemic risk (Article 64). Established within the Commission as part of the administrative structure of DG CONNECT, it is expected to be operational in July 2024. Its work will also cover compliance, safety, robotics and international affairs, as well as monitoring trends and investments and supporting applications such as weather modelling, cancer diagnosis and digital twins.

The EU AI Board is a broader advisory body composed of representatives from each Member State, the European Data Protection Supervisor and other invited experts. The Board is tasked with a broad range of technical and non-technical activities, such as advising and assisting the European Commission (EC) and the Member States to facilitate the consistent and effective application of the AI Act. It will collect and share technical expertise and establish sub-groups that provide a platform for exchange among market surveillance authorities and notifying authorities. Among other tasks, it will promote AI literacy and public awareness and understanding of the benefits and risks of AI (Articles 65-66).

The Advisory Forum will provide technical expertise and advise the Board and the EC. It is membership-based and includes bodies such as the Fundamental Rights Agency, ENISA, the European Committee for Standardization (CEN), the European Committee for Electrotechnical Standardization (CENELEC) and the European Telecommunications Standards Institute (ETSI). Its membership will be based on a balanced selection of stakeholders, including industry, start-ups, SMEs, academia, civil society and social partners. The Advisory Forum may prepare opinions, recommendations and written contributions at the request of the Board or the EC (Recital 150, Article 67). Social partners therefore have an opportunity to define their contribution within the Advisory Forum more precisely over time and to play an active role.

Another body is the scientific panel of independent experts selected by the EC, which will advise and support the AI Office in its enforcement activities (Article 68).

Last but not least, these structures may work in close collaboration with the European Centre for Algorithmic Transparency.

At national level, each Member State will designate at least one notifying authority and one market surveillance authority for the purposes of the AI Act (Article 70). The market surveillance authority is responsible for enforcement and is to be informed of infringements of the Regulation. The notifying authority is responsible for designating conformity assessment bodies. Member States are now considering the requirements for designating these authorities: which criteria should apply to the performance of their mandate, what resources should be allocated to them, and how these new bodies will collaborate with existing ones. Societal stakeholders can investigate how these authorities will be shaped and organised in their own countries and whether they can take part in any related process or consultation.

It is worth mentioning that the AI Act is not a standalone initiative but part of a broader regulatory 'infrastructure' established by the EU with a focus on investment. This includes a Coordinated Plan on AI to accelerate investment in AI technologies in the Member States, financial measures to support European start-ups and SMEs, the development of Common European Data Spaces, and an AI Pact that seeks industry's voluntary commitment to implement the legal requirements of the AI Act ahead of the legal deadline. In workplaces, workers' representatives could use this opportunity to invite employers, as providers or deployers of AI systems, to adopt the legal requirements sooner.

-

The dynamics of international governance on AI

In the international push to regulate AI, several instruments have been developed almost in parallel. This section briefly addresses their interlinkages and mutual influence.

First, the AI Act has been developed in close cooperation with other international organisations. The cooperation with the OECD stands out in particular: the EU institutions adopted the definition of an AI system that the OECD updated during the AI Act trilogues. The OECD also reviewed and updated certain elements of its principles for trustworthy AI, which were crucial to that definition. Strategic cooperation is also a focus of the EU-US Trade and Technology Council, where discussions address standardisation issues.

A second observation is that the EU, the Council of Europe, the OECD and the UN all took the initiative of drafting regulatory instruments concurrently. Given their overlapping membership, mutual influence was unavoidable. For example, the OECD's AI Principles closely resemble the legal requirements of the AI Act. The UN General Assembly's resolution 'Seizing the opportunities of safe, secure and trustworthy artificial intelligence systems for sustainable development' shares the AI Act's core aim of promoting safe, secure and trustworthy AI systems.

A third observation is that the production of some regulatory instruments did not entirely follow the traditional lawmaking process. For example, the Council of Europe opened the negotiation of its Convention on Artificial Intelligence, Human Rights, Democracy and the Rule of Law to non-member states such as the United States, Canada, Israel and Japan. Despite having only observer status, these countries influenced the negotiations through their active participation. In the EU, after tough negotiations, the co-legislators inserted an additional chapter on General Purpose AI models, which had not been part of the Commission's original proposal.

These examples show that AI governance is not only about technology but is also shaped by highly political and economic interests. In this complex ecosystem, a diverse range of societal stakeholders has a valuable opportunity to become proactively involved in the implementation of these AI governance frameworks.

-

Opportunities for action at the workplace

The EC and national competent authorities are committed to 'ensuring the involvement of stakeholders in the implementation and application' of the AI Act. There is also a financial incentive for compliance, as Member States can impose dissuasive penalties if AI systems do not meet the legal requirements.

This commitment, combined with the incentive to avoid financial and administrative penalties, can serve as leverage for societal stakeholders, who can use the regulation to advance their prevention and protection strategies and to advocate for safer and more sustainable AI systems:

A) Worker Involvement in Risk Assessment

AI can generate risks and cause material or immaterial harm (physical, psychological, societal or economic) to public interests and fundamental rights (Recitals 5-7). Workers and their representatives have several rights in this context. Before a high-risk AI system is put into use at the workplace, informing workers and their representatives is a prerequisite (Recital 92, Article 26(7)). This gives them an entry point to become proactively involved in conducting risk assessments.

B) Harmonized Standards and Trade Union Involvement

In developing harmonized standards, the EC intends to 'seek to promote investment and innovation in AI' by increasing competitiveness and the growth of the EU market. This requires a balanced representation of interests and the effective participation of relevant stakeholders in accordance with EU Standardisation Regulation 1025/2012 (Article 40). Here, national trade unions, through the European Trade Union Confederation, can actively participate in the EU standardisation process, although without voting rights.

C) Education and AI Literacy

Employers deploying AI systems need to establish human oversight to support informed decision-making. They must enable their employees to be informed 'about the intended and precluded uses, and use the high-risk AI system correctly and as appropriate' (Recital 72). Employers should also inform employees about the system's performance regarding the specific persons on whom it is intended to be used (Article 13). Worker representatives must be informed, understand the instructions for use, and be able to signal possible risks, harms and inappropriate use. They also need to build up their own knowledge and be actively involved in risk management practices. Appropriate education and AI literacy are therefore essential requirements that employers need to provide.

D) Involvement in Human Oversight

An essential legal requirement is the implementation of human oversight measures that enable the people overseeing a high-risk AI system to properly understand its relevant capacities and limitations (Article 14). Employees could be part of such oversight. Trained employees can detect anomalies, dysfunctions and unexpected performance, as well as cases where the AI system is not being used for its intended purpose. They should acquire sufficient literacy to be aware of the risk of over-reliance on AI outputs and to demand precautionary and preventive measures. Employers should provide all documentation, including the instructions for use, with comprehensive, accessible and understandable information that takes into account the needs and foreseeable knowledge of the employees using the systems (Recital 72).

E) Participation in Codes of Conduct

The AI Office and the Member States will encourage the development of codes of conduct for the voluntary application of specific requirements, including 'promoting AI literacy, in particular that of persons dealing with AI development, operation and use, assessing and preventing negative impacts on vulnerable people, and promoting stakeholder participation in the process' (Article 95). Employees and their representatives can use this provision to ask employers to develop effective codes of conduct in a participatory manner.

Finally, Article 2(11) states that the AI Act does not preclude the EU or Member States 'from maintaining or introducing laws, regulations or administrative provisions which are more favourable to workers in terms of protecting their rights, regarding the use of AI systems by employers'. Nor does it preclude encouraging or allowing the application of collective agreements that are more favourable to workers. This forward-looking provision opens the door to future legislative work on AI by the next European Commission. Such work could help fill gaps in the legislation and offers an opportunity to propose an instrument dedicated to AI at work.

____________________

Footnotes

[1] The common name is the AI Act, but legally it will be a regulation.

[2] The Act defines “deployers” as: “a natural or legal person, public authority, agency or other body using an AI system under its authority except where the AI system is used in the course of a personal non-professional activity”.